Neutron stars merge roughly every 15 seconds somewhere in the visible universe. These mergers release tremendous amounts of energy in the form of gravitational waves and electromagnetic radiation, which encode information about the extreme nuclear matter the stars are made of. If such an event can be localized on the sky with gravitational waves quickly enough, telescopes can be pointed to search for the electromagnetic radiation released by the colliding stars. The electromagnetic radiation can then reveal how elements heavier than iron – such as gold – are created, and shed light on the abundance of these heavy elements in the universe. However, localizing the sources using gravitational waves is time-critical: the electromagnetic radiation decays over the course of hours to days, so precise maps of the potential sky locations must be promptly communicated to telescopes.

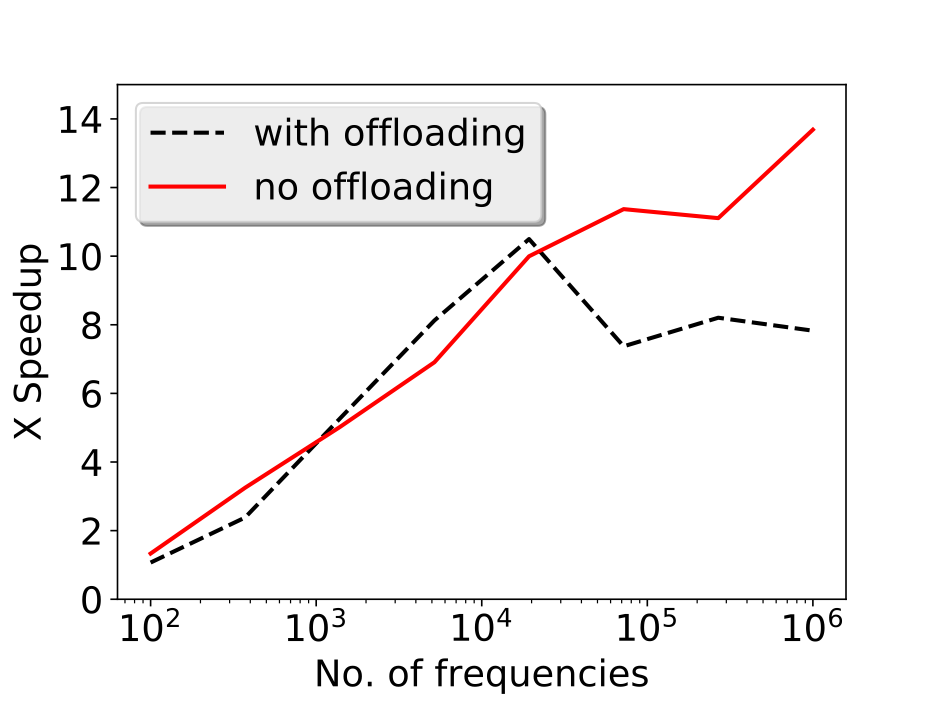

ADACS has taken a significant step towards making this a reality by optimising a standard gravitational wave analysis package (LALSuite; used in this case for the generation of waveform templates for merging compact objects; see here for more details) for execution on GPUs. For meaningful problem sizes (involving more than 10^4 frequencies), order-of-magnitude speed-ups have been obtained, providing the best possible chance for the rapid telescope pointing required to unravel the mysteries of heavy element production in the universe.

A paper presenting the performance gains of this optimised code in the context of realistic Bayesian inference calculations has been published in Physical Review D by Dr. Colm Talbot, Dr. Rory Smith, Dr. Greg Poole and Dr. Eric Thrane, and can be viewed here.

“ADACS support on this project has significantly accelerated our research plans and capabilities. We have now been able to significantly increase the impact that our work will have in analysing gravitational-wave signals. The close relationship between astronomers and ADACS is unique in gravitational-wave astronomy and is facilitating world-leading research!” – Dr. Smith, Monash University.

Access to this GPU-optimised version of LALSuite can be obtained here. A Python package documenting and illustrating its use can be obtained here (documentation is available here).

Speed-up of ADACS’s LALSuite GPU optimisations as a function of problem size (number of waveform frequencies), in the cases where waveforms are offloaded from the GPU (the “with offloading” case) or left on the GPU (the “no offloading” case; useful, for example, when the goodness of fit is subsequently computed on the GPU). For realistic problem sizes (more than 10^4 frequencies), order-of-magnitude speed-ups are achieved.
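The difference between the two cases in the caption above can be sketched as follows. This is a minimal illustration and not the ADACS or LALSuite code: `toy_waveform` is a hypothetical stand-in for a real waveform model, the noise spectrum is assumed to be flat, and the script falls back to NumPy when no usable GPU or CuPy installation is found.

```python
import numpy as np

# Use CuPy if a GPU is actually usable, otherwise fall back to NumPy so the
# sketch still runs on a CPU-only machine.
try:
    import cupy as cp
    cp.cuda.runtime.getDeviceCount()  # raises if no usable device
    xp = cp
except Exception:
    xp = np


def toy_waveform(frequencies, time_shift=0.1):
    """Hypothetical frequency-domain waveform (not a LALSuite model)."""
    phase = 2 * xp.pi * frequencies * time_shift
    return frequencies ** (-7.0 / 6.0) * xp.exp(1j * phase)


def to_host(array):
    """Copy an array to host memory (a no-op for NumPy arrays)."""
    return array.get() if hasattr(array, "get") else np.asarray(array)


frequencies = xp.linspace(20.0, 2048.0, 2 ** 15)
df = frequencies[1] - frequencies[0]
data = toy_waveform(frequencies)  # pretend the data are a noise-free signal

# "With offloading": the full waveform array is copied back to the host.
template_host = to_host(toy_waveform(frequencies))

# "No offloading": the waveform stays on the device, the goodness of fit
# (here a flat-spectrum inner product) is computed there, and only a single
# number is transferred back.
template = toy_waveform(frequencies)
inner_product = 4 * df * xp.sum(xp.conj(data) * template).real
inner_product_host = float(inner_product)
```

In the second path the device-to-host copy shrinks from one complex number per frequency to a single scalar, so the transfer cost no longer grows with the number of frequencies.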

From the Talbot et al. paper: Speedup comparing our GPU-accelerated waveforms with the equivalent versions in LALSuite as a function of the number of frequencies. The corresponding signal duration is shown for comparison assuming a maximum frequency of 2048 Hz. The blue curve shows the comparison of a CuPy implementation of TaylorF2 with the version available in LALSuite. For binary neutron star inspirals the signal duration from 20 Hz is ∼100 s. At such signal durations, we see a speedup of ∼10×. For even longer signals we find an acceleration of up to 80×. The orange curves show a comparison of our CUDA implementation of IMRPhenomPv2: the solid curve reuses a pre-allocated memory buffer while the dashed curve does not.
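The link between signal duration and number of frequencies in the caption above can be checked with a short calculation. The sketch below is an illustration under stated assumptions, not code from the paper: it uses the leading-order (Newtonian) chirp-time formula, assumes two 1.4 solar-mass neutron stars, rounds the analysis segment up to a power of two, and takes the frequency spacing to be one over the segment length. The function names are chosen for this example only.

```python
import math

# G * M_sun / c^3 in seconds (solar mass expressed as a time).
SOLAR_MASS_IN_SECONDS = 4.925491e-6


def chirp_mass(m1, m2):
    """Chirp mass in solar masses for component masses in solar masses."""
    return (m1 * m2) ** 0.6 / (m1 + m2) ** 0.2


def time_to_merger(m1, m2, f_low):
    """Leading-order time (s) for the signal to evolve from f_low (Hz) to merger."""
    mc = chirp_mass(m1, m2) * SOLAR_MASS_IN_SECONDS
    return 5.0 / 256.0 * mc ** (-5.0 / 3.0) * (math.pi * f_low) ** (-8.0 / 3.0)


def number_of_frequencies(duration, f_max):
    """Frequency bins from 0 to f_max (Hz) at a spacing of 1 / duration."""
    return int(f_max * duration) + 1


# Assumed example system: an equal-mass 1.4 + 1.4 solar-mass binary.
tau = time_to_merger(1.4, 1.4, 20.0)

# Assumed convention: pad the segment up to the next power of two seconds.
segment = 2 ** math.ceil(math.log2(tau))

n_freq = number_of_frequencies(segment, 2048.0)
print(f"time in band: {tau:.0f} s, segment: {segment} s, frequencies: {n_freq}")
```

Under these assumptions the time in band is of order 100 s and the number of frequencies comes out well above 10^4, which is the regime where the caption reports order-of-magnitude speedups.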