Speeding up Reionization with GPUs

Investigators:

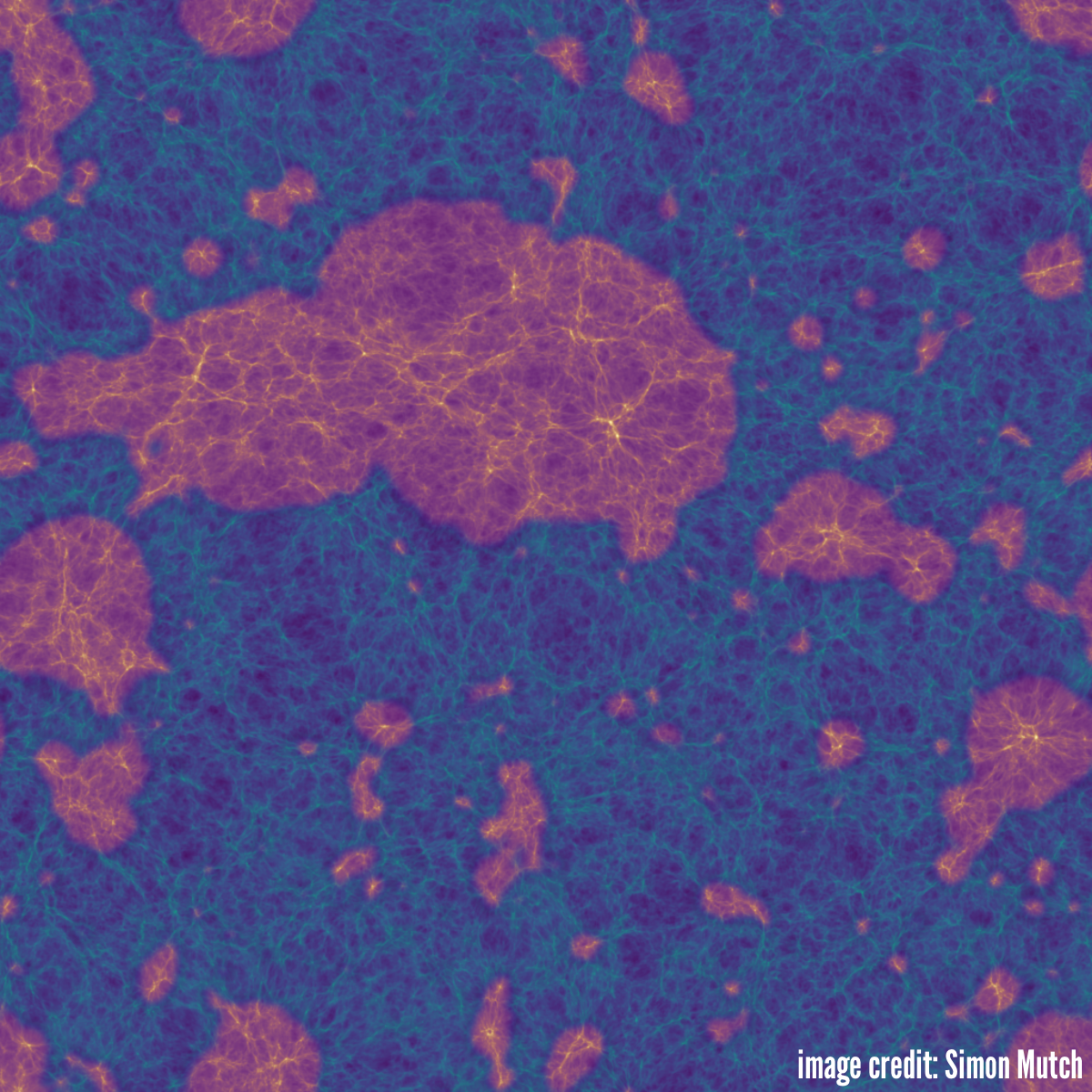

- Simon Mutch (University of Melbourne)

- Paul Geil (University of Melbourne)

- Stuart Wyithe (University of Melbourne)

- Cathryn Trott (Curtin University)

Proposal Summary:

We request the equivalent of 9 weeks of time from a software developer with expertise in C/CUDA (Thrust) to replace a CPU-bound, time-intensive algorithm in the Meraxes semi-analytic model with an efficient GPU version. Meraxes is a state-of-the-art semi-analytic galaxy formation model specifically designed to self-consistently couple galaxy growth and the Epoch of Reionization (EoR). It achieves this by modelling galaxy formation using traditional semi-analytic techniques, then filtering the simulation volume using an excursion set formalism to identify ionized regions in the intergalactic medium.

Unfortunately, this excursion set formalism is a computational bottleneck in our methodology. At each simulation snapshot, we must run the same calculation for every cell in multiple Cartesian grids many times over, resulting in up to ~3×10¹⁰ calculations per snapshot. The repetitive, structured nature of this filtering procedure means that an efficient GPU implementation should be viable. It would greatly speed up the code and allow us to take advantage of the GPU infrastructure currently available to the community.

This would in turn allow us to statistically explore the poorly constrained free parameter space of the EoR, including fundamental quantities that remain poorly known, such as the escape fraction of ionizing photons from galaxies and their mean free path through the intergalactic medium. Understanding these parameters and their degeneracies with other properties of high-redshift galaxies will be key to interpreting the results of current (e.g. MWA, PAPER, LOFAR) and future (e.g. SKA) radio surveys, which aim to explore galaxy formation in the early Universe by measuring 21-cm emission from neutral hydrogen during the EoR.