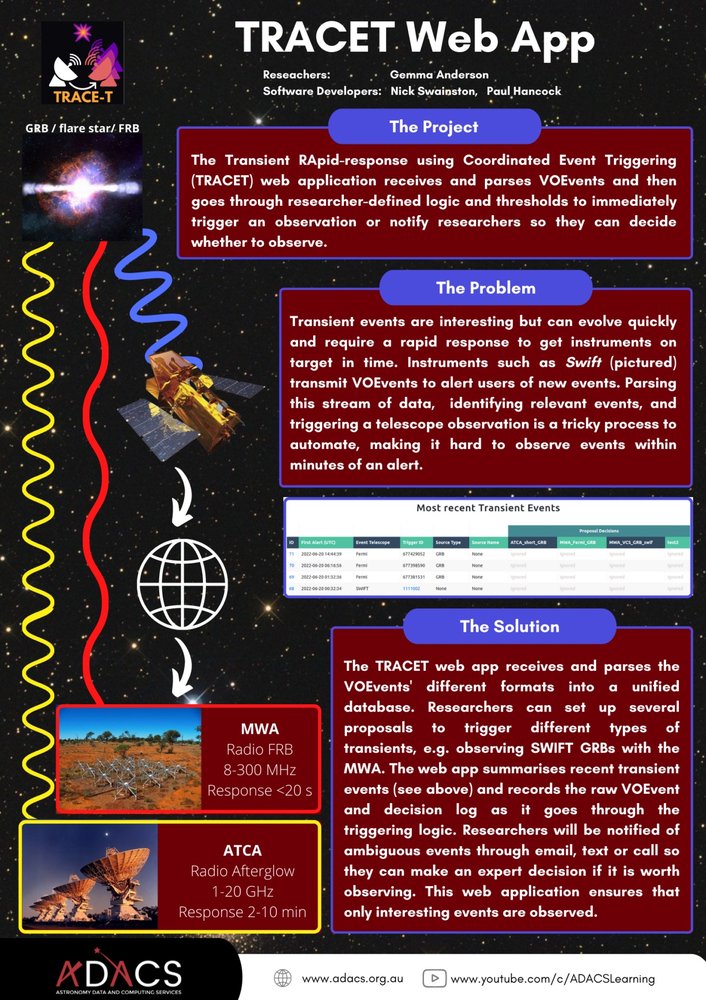

The Transient RApid-response using Coordinated Event Triggering (TRACET) web application was designed to receive notices of transient events via the VOEvent network and decide which events are worth following up with the Murchison Widefield Array (MWA) telescope.

Transient events can evolve quickly, so studying them requires a rapid response to get instruments on target in time. Instruments such as Swift (pictured) broadcast VOEvents to alert users to new events. Parsing this data stream, identifying the relevant events and triggering a telescope observation is a tricky process to automate, which makes it hard to observe events within minutes of an alert.
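To illustrate the parsing step, here is a minimal sketch that turns a VOEvent 2.0 packet into a flat record using Python's standard `xml.etree.ElementTree`. It is not TRACET's parser: the `ParsedEvent` record, the `parse_voevent` function and the sample packet (with its made-up IVORN, time, coordinates and `event_type` parameter) are hypothetical, and real alert streams differ in which parameters and coordinate elements they include.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import Dict, Optional

# VOEvent 2.0 namespace; in typical packets only the root element is qualified.
VOE_NS = "http://www.ivoa.net/xml/VOEvent/v2.0"
# Path from the root to the coordinates of the observed event.
COORDS = "WhereWhen/ObsDataLocation/ObservationLocation/AstroCoords"


@dataclass
class ParsedEvent:
    """Hypothetical flat record for one VOEvent packet."""
    ivorn: str
    role: str
    isotime: Optional[str] = None
    ra_deg: Optional[float] = None
    dec_deg: Optional[float] = None
    params: Dict[str, str] = field(default_factory=dict)


def _to_float(text: Optional[str]) -> Optional[float]:
    # Missing or empty elements become None rather than raising.
    return float(text) if text else None


def parse_voevent(xml_text: str) -> ParsedEvent:
    root = ET.fromstring(xml_text)
    if root.tag != f"{{{VOE_NS}}}VOEvent":
        raise ValueError(f"not a VOEvent 2.0 packet: {root.tag}")
    # Gather every <Param name="..." value="..."/> under <What>, including grouped ones.
    params: Dict[str, str] = {}
    what = root.find("What")
    if what is not None:
        for p in what.iter("Param"):
            params[p.get("name", "")] = p.get("value", "")
    return ParsedEvent(
        ivorn=root.get("ivorn", ""),
        role=root.get("role", ""),
        isotime=root.findtext(f"{COORDS}/Time/TimeInstant/ISOTime"),
        ra_deg=_to_float(root.findtext(f"{COORDS}/Position2D/Value2/C1")),
        dec_deg=_to_float(root.findtext(f"{COORDS}/Position2D/Value2/C2")),
        params=params,
    )


# Made-up test packet for illustration only; not a real alert.
SAMPLE = """<voe:VOEvent xmlns:voe="http://www.ivoa.net/xml/VOEvent/v2.0"
    ivorn="ivo://example.org/test#1" role="test" version="2.0">
  <What><Param name="event_type" value="GRB"/></What>
  <WhereWhen><ObsDataLocation><ObservationLocation>
    <AstroCoords coord_system_id="UTC-FK5-GEO">
      <Time unit="s"><TimeInstant><ISOTime>2024-01-01T00:00:00</ISOTime></TimeInstant></Time>
      <Position2D unit="deg"><Value2><C1>123.45</C1><C2>-45.6</C2></Value2></Position2D>
    </AstroCoords>
  </ObservationLocation></ObsDataLocation></WhereWhen>
</voe:VOEvent>"""

if __name__ == "__main__":
    print(parse_voevent(SAMPLE))
```

Reading every `<Param>` into a dictionary keeps the parser independent of any one alert format; deciding which parameters matter can be left to per-source logic further down the pipeline.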

Over three semesters, ADACS has built on the existing MWA triggering software to create a web app that gives users a more complete and accessible view of the current state of the triggering system. The web app allows users to view all received alerts, define and edit triggering programs, and connect the app to both the MWA and the Australia Telescope Compact Array (ATCA) radio telescopes.

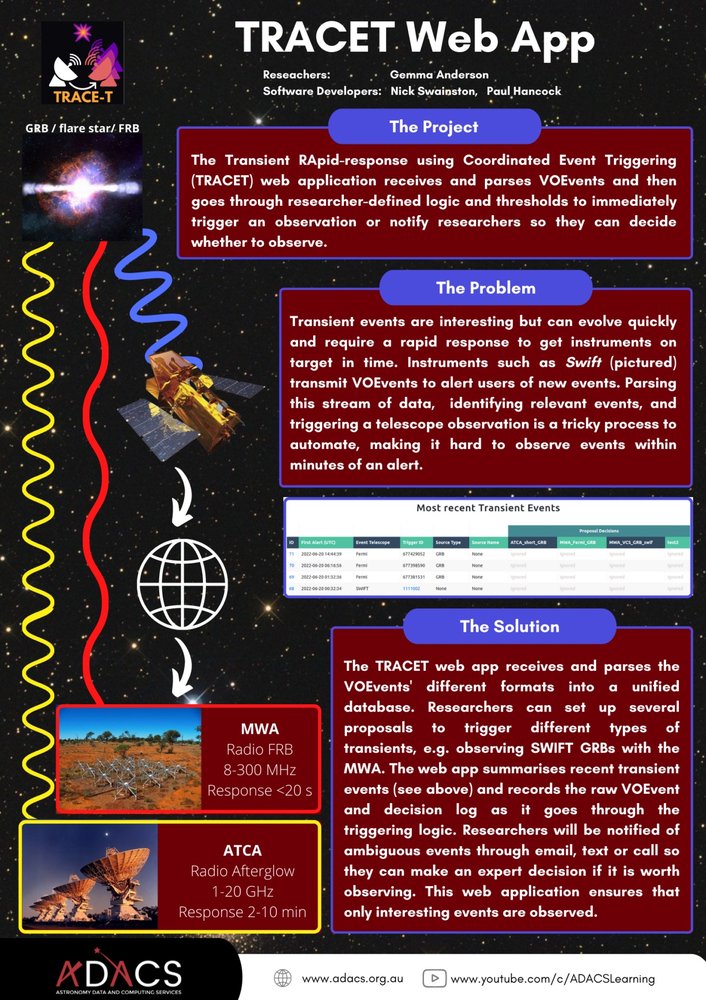

The TRACET web app receives the various VOEvent formats and parses them into a unified database. Researchers can set up several proposals to trigger on different types of transients, e.g. observing Swift gamma-ray bursts (GRBs) with the MWA. The web app summarises recent transient events and records the raw VOEvent and the decision log as each event passes through the triggering logic. Researchers are notified of ambiguous events by email, SMS or phone call so they can make an expert decision on whether the event is worth observing. This helps ensure that telescope time is spent only on events of interest.
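The proposal logic described above can be sketched as a three-way decision: trigger automatically, ignore, or flag the event as ambiguous and notify a researcher. The Python below is a hypothetical illustration, not TRACET's implementation: the `Event` and `Proposal` classes, the declination cut, the significance thresholds and the `notify` placeholder (which prints instead of sending an email, SMS or call) are all assumptions chosen for clarity.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Decision(Enum):
    TRIGGER = "trigger"  # observe automatically
    PENDING = "pending"  # ambiguous: a researcher should decide
    IGNORE = "ignore"    # not worth observing


@dataclass
class Event:
    """Hypothetical unified event record; field names are illustrative."""
    source: str
    event_type: str
    dec_deg: float
    significance: Optional[float]


@dataclass
class Proposal:
    """Hypothetical triggering proposal; thresholds are illustrative only."""
    name: str
    telescope: str
    source: str
    event_type: str
    max_dec_deg: float    # ignore events north of this declination
    trigger_sigma: float  # at or above this significance: trigger
    review_sigma: float   # from here up to trigger_sigma: ask a researcher
    log: List[str] = field(default_factory=list)

    def decide(self, ev: Event) -> Decision:
        if (ev.source, ev.event_type) != (self.source, self.event_type):
            return self._record(Decision.IGNORE, "event type not covered by this proposal")
        if ev.dec_deg > self.max_dec_deg:
            return self._record(Decision.IGNORE, f"declination {ev.dec_deg} above limit")
        if ev.significance is None:
            return self._record(Decision.PENDING, "no significance reported")
        if ev.significance >= self.trigger_sigma:
            return self._record(Decision.TRIGGER, f"significance {ev.significance}")
        if ev.significance >= self.review_sigma:
            return self._record(Decision.PENDING, f"significance {ev.significance} in review band")
        return self._record(Decision.IGNORE, f"significance {ev.significance} too low")

    def _record(self, decision: Decision, reason: str) -> Decision:
        # Append a human-readable line to this proposal's decision log.
        self.log.append(f"{self.name} [{self.telescope}]: {decision.value} ({reason})")
        return decision


def notify(proposal: Proposal, ev: Event) -> None:
    # Placeholder: a real system would send an email, SMS or phone call here.
    print(f"[{proposal.name}] ambiguous {ev.source} {ev.event_type}, please review")


if __name__ == "__main__":
    grb_mwa = Proposal("swift_grb_mwa", "MWA", "Swift", "GRB",
                       max_dec_deg=30.0, trigger_sigma=8.0, review_sigma=5.0)
    events = [
        Event("Swift", "GRB", dec_deg=-40.0, significance=10.2),  # -> trigger
        Event("Swift", "GRB", dec_deg=-10.0, significance=6.1),   # -> pending
        Event("Swift", "GRB", dec_deg=50.0, significance=12.0),   # -> ignore
    ]
    for ev in events:
        if grb_mwa.decide(ev) is Decision.PENDING:
            notify(grb_mwa, ev)
    print("\n".join(grb_mwa.log))
```

Keeping a per-proposal log of each decision and its reason mirrors the idea of recording the decision log, so researchers can later see why an event was or was not observed.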
